Felo AI Chat now supports free use of the o1 reasoning model

In the rapidly developing field of artificial intelligence, OpenAI has launched a series of groundbreaking language models called the o1 series. These models are designed to perform complex reasoning tasks, which makes them a powerful tool for developers and researchers. In this blog post, we'll explore how to use OpenAI's reasoning models effectively, focusing on their capabilities, limitations and best practices for implementation.

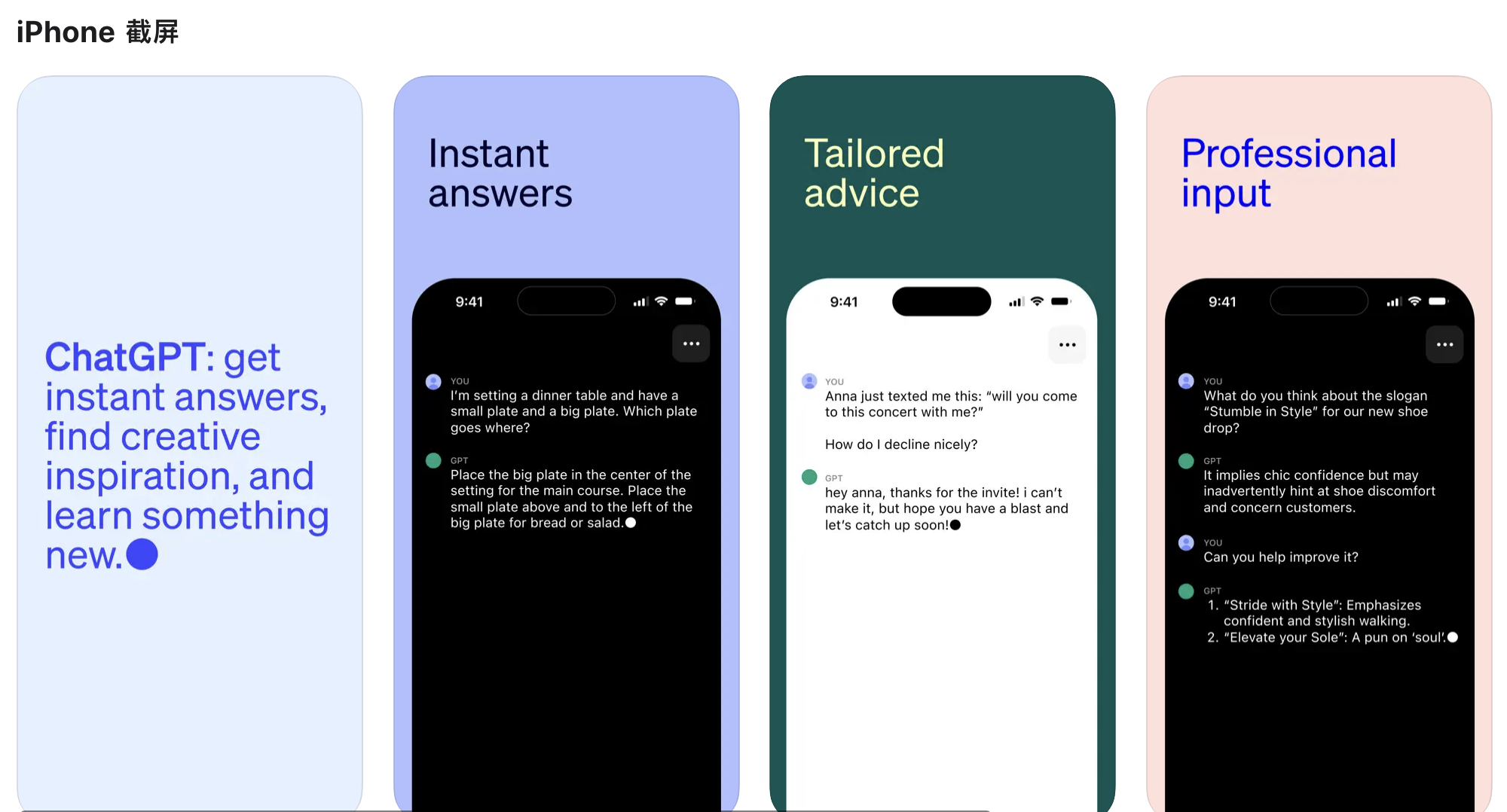

Felo AI Chat now supports free use of the o1 reasoning models. Come and try it!

Understanding OpenAI o1 series models

The o1 series differs from OpenAI's previous language models mainly in how it is trained. The models use reinforcement learning to strengthen their reasoning, which lets them think before they respond. This internal thought process allows a model to produce a long chain of reasoning before answering, which is particularly useful for solving complex problems.

Main features of the OpenAI o1 model

1. **Advanced reasoning**: The o1 models excel at scientific reasoning and have achieved impressive results on competitive programming and academic benchmarks. For example, they rank in the 89th percentile on Codeforces and demonstrate PhD-level accuracy in subjects such as physics, biology and chemistry.

2. **Two variants**: OpenAI offers two versions of the o1 model through its API:

– **o1-preview**: An early version, designed to reason about hard problems using broad general knowledge.

– **o1-mini**: A faster and more cost-effective variant, particularly suited to coding, math and science tasks that do not require broad general knowledge.

3. **Context window**: The o1 models have a sizeable context window of 128,000 tokens, which leaves room for extensive input and reasoning. However, it is crucial to manage this context well to avoid hitting the token limit.

Get started with the OpenAI o1 model

To start using the o1 models, developers can access them through the chat completions endpoint of the OpenAI API.
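As a rough illustration of such a call, the sketch below builds a chat completions request using only the Python standard library. The endpoint URL and the `OPENAI_API_KEY` environment variable follow OpenAI's public conventions; the helper names `build_o1_request` and `send_request`, the example prompt and the default token cap are made up for this example.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_o1_request(prompt, model="o1-mini", max_completion_tokens=4096):
    """Build a chat completions payload for an o1 series model (hypothetical helper)."""
    return {
        "model": model,
        # A single user message; no system message is included.
        "messages": [{"role": "user", "content": prompt}],
        # Caps reasoning tokens plus visible completion tokens.
        "max_completion_tokens": max_completion_tokens,
    }


def send_request(payload, api_key=None):
    """POST the payload and return the decoded JSON reply (needs network access)."""
    api_key = api_key or os.environ["OPENAI_API_KEY"]
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


payload = build_o1_request("Write a function that checks whether a number is prime.")
print(json.dumps(payload, indent=2))
# To actually call the API: reply = send_request(payload)
```

Building the payload separately from sending it makes it easy to inspect or log a request before spending any tokens.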

Are you ready to improve your AI chat experience? Felo AI Chat now offers the opportunity to explore the cutting-edge o1 reasoning models, completely free!


Beta limitations of OpenAI o1 model

Note that the o1 models are currently in beta, which means there are some limitations to be aware of. During the beta phase, many chat completions API parameters are not yet available. Most notably:

- Modalities: Text only; images are not supported.

- Message types: Only user and assistant messages are supported; system messages are not.

- Streaming: Not supported.

- Tools: Tools, function calling and response format parameters are not supported.

- Logprobs: Not supported.

- Other: `temperature`, `top_p` and `n` are fixed at 1, while `presence_penalty` and `frequency_penalty` are fixed at 0.

- Assistants and Batch: These models are not supported in the Assistants API or the Batch API.
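The limitations above can be turned into a simple pre-flight check. The following is a minimal sketch, not an official validator: `check_o1_beta_request` is a hypothetical helper, and the parameter names it inspects (`stream`, `tools`, `functions`, `response_format`, `logprobs`) are assumed to be the chat completions fields that the list refers to.

```python
# Values the beta fixes for sampling parameters, per the list above.
FIXED_VALUES = {
    "temperature": 1,
    "top_p": 1,
    "n": 1,
    "presence_penalty": 0,
    "frequency_penalty": 0,
}
# Assumed request fields for tools, function calling, response format and logprobs.
UNSUPPORTED_KEYS = ("tools", "functions", "response_format", "logprobs")


def check_o1_beta_request(payload):
    """Return a list of problems; an empty list means none were found."""
    problems = []
    for message in payload.get("messages", []):
        if message.get("role") not in ("user", "assistant"):
            problems.append(f"unsupported message role: {message.get('role')}")
        if not isinstance(message.get("content"), str):
            problems.append("only text content is supported")
    if payload.get("stream"):
        problems.append("streaming is not supported")
    for key in UNSUPPORTED_KEYS:
        if key in payload:
            problems.append(f"parameter not supported: {key}")
    for key, fixed in FIXED_VALUES.items():
        if key in payload and payload[key] != fixed:
            problems.append(f"{key} is fixed to {fixed}")
    return problems


request = {
    "model": "o1-mini",
    "messages": [
        {"role": "system", "content": "You are terse."},
        {"role": "user", "content": "Hello"},
    ],
    "temperature": 0.2,
    "stream": True,
}
for problem in check_o1_beta_request(request):
    print(problem)
```

Running a check like this before sending a request surfaces unsupported options locally rather than as an API error.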

**Manage the context window**:

Since the context window is 128,000 tokens, the space must be managed effectively. Each completion has a maximum output token limit, which includes both reasoning tokens and visible completion tokens. For example:

– **o1-preview**: Up to 32,768 tokens

– **o1-mini**: Up to 65,536 tokens
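To make these limits concrete, here is a small sketch that works out how many output tokens a request can still use. It assumes that the prompt and the generated tokens share the same 128,000-token window; `output_budget` is a hypothetical helper, and the prompt sizes are illustrative.

```python
CONTEXT_WINDOW = 128_000
MAX_OUTPUT_TOKENS = {"o1-preview": 32_768, "o1-mini": 65_536}


def output_budget(model, prompt_tokens):
    """Return the largest output token budget that still fits for this prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Assumption: input and output tokens share one context window.
    remaining = CONTEXT_WINDOW - prompt_tokens
    return max(0, min(MAX_OUTPUT_TOKENS[model], remaining))


print(output_budget("o1-preview", 10_000))  # 32768
print(output_budget("o1-mini", 100_000))    # 28000
```

With a long prompt, the remaining window rather than the per-model output limit becomes the binding constraint, as the second call shows.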

OpenAI o1 model speed

To illustrate the difference in speed, we compared how GPT-4o, o1-mini and o1-preview responded to a word reasoning question. GPT-4o gave the wrong answer, while both o1-mini and o1-preview answered correctly, with o1-mini reaching the correct answer about 3-5 times faster.

How to choose between GPT-4o, o1-mini and o1-preview?

**o1-preview**: An early version of the OpenAI o1 model, designed to reason about complex problems using broad general knowledge.

**o1-mini**: A faster and more affordable version of o1, particularly good at coding, math and science tasks, and suited to situations where broad general knowledge is not required.

The o1 models offer significant improvements in reasoning, but they are not intended to replace GPT-4o in every use case.

For applications that need image input, function calling or consistently fast response times, GPT-4o and GPT-4o mini are still the best options. However, if you are developing an application that requires deep reasoning and can tolerate longer response times, the o1 models may be a good choice.

Prompting tips for o1-mini and o1-preview models

OpenAI o1 models work best with clear and direct prompts. Some techniques, such as few-shot prompting or asking the model to "think step by step", may not improve performance and can even hinder it. Here are some best practices:

1. **Keep prompts simple and direct**: The models work best when they receive brief, clear instructions without extensive elaboration.

2. **Avoid chain-of-thought prompts**: Since these models handle reasoning internally, there is no need to prompt them to "think step by step" or "explain your reasoning".

3. **Use delimiters to improve clarity**: Use delimiters such as triple quotes, XML tags or section titles to clearly mark the different parts of the input. This helps the model interpret each part correctly.

4. **Limit additional context in retrieval-augmented generation (RAG)**: When providing additional context or documents, include only the most relevant information to avoid overcomplicating the model's response.
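A prompt that follows these practices could be assembled along the lines below. This is only a sketch: `build_prompt`, the `<document>` tag name and the sample texts are invented for illustration, and the documents are assumed to arrive already sorted from most to least relevant.

```python
def build_prompt(instruction, documents, max_documents=2):
    """Combine a short instruction with a few delimited context documents.

    `documents` is assumed to be sorted from most to least relevant.
    """
    # Keep only the most relevant documents to limit extra context.
    selected = documents[:max_documents]
    parts = [instruction.strip()]
    for index, text in enumerate(selected, start=1):
        # XML-style tags mark where each document starts and ends.
        parts.append(f'<document id="{index}">\n{text.strip()}\n</document>')
    return "\n\n".join(parts)


prompt = build_prompt(
    "Summarize the refund policy in two sentences.",
    [
        "Refunds are issued within 14 days of purchase.",
        "Shipping is free for orders over $50.",
        "Our office is closed on Sundays.",
    ],
)
print(prompt)
```

The instruction stays short and direct, contains no "think step by step" phrasing, and the least relevant document is dropped before the prompt is sent.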

Pricing for o1-mini and o1-preview models

The o1-mini and o1-preview models are priced differently from other models, because the cost also includes reasoning tokens.

o1-mini pricing

$3.00 / 1M input tokens

$12.00 / 1M output tokens

o1-preview pricing

$15.00 / 1M input tokens

$60.00 / 1M output tokens
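As a worked example of these prices, the sketch below estimates the cost of one request. It assumes that reasoning tokens are charged at the output rate; `estimate_cost` is a hypothetical helper and the token counts are illustrative.

```python
# USD per 1M tokens, taken from the price list above.
PRICES = {
    "o1-mini": {"input": 3.00, "output": 12.00},
    "o1-preview": {"input": 15.00, "output": 60.00},
}


def estimate_cost(model, input_tokens, visible_output_tokens, reasoning_tokens):
    """Estimate the cost in USD of a single request."""
    price = PRICES[model]
    # Assumption: reasoning tokens are charged at the output rate.
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * price["input"] + billed_output * price["output"]) / 1_000_000


print(f"${estimate_cost('o1-mini', 1_000, 500, 1_500):.4f}")     # $0.0270
print(f"${estimate_cost('o1-preview', 1_000, 500, 1_500):.4f}")  # $0.1350
```

In this example the reasoning tokens account for most of the bill even though the user never sees them.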

Manage o1-preview/o1-mini model costs

To control the cost of the o1 series models, you can use the `max_completion_tokens` parameter to limit the total number of tokens the model generates, including both reasoning and completion tokens.

In earlier models, the `max_tokens` parameter controlled both the number of tokens generated and the number of tokens visible to the user, which were always the same. In the o1 series, however, the total number of tokens generated can exceed the number of visible tokens because of the internal reasoning tokens.

Because some applications rely on `max_tokens` matching the number of tokens received from the API, the o1 series introduces `max_completion_tokens` to explicitly control the total number of tokens generated by the model, including both reasoning and visible completion tokens. This explicit opt-in ensures that existing applications remain compatible with the new models. The `max_tokens` parameter continues to work as before for all previous models.
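To see how generated and visible tokens can differ, the sketch below splits the completion token count of a response into its two parts. It assumes the response's `usage` object reports reasoning tokens under `completion_tokens_details.reasoning_tokens`; `split_completion_tokens` is a hypothetical helper and the sample numbers are made up.

```python
def split_completion_tokens(usage):
    """Return (reasoning_tokens, visible_tokens) from a usage dictionary."""
    total = usage["completion_tokens"]
    # Assumed field layout; treated as zero reasoning tokens if it is absent.
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    return reasoning, total - reasoning


sample_usage = {
    "prompt_tokens": 120,
    "completion_tokens": 900,
    "completion_tokens_details": {"reasoning_tokens": 640},
}
reasoning, visible = split_completion_tokens(sample_usage)
print(f"reasoning={reasoning}, visible={visible}")  # reasoning=640, visible=260
```

Here a `max_completion_tokens` value of 900 or more would be needed to produce only 260 visible tokens, which is why the limit should leave headroom for reasoning.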

Conclusion

OpenAI's o1 series of models represents a major advance in artificial intelligence, particularly in the ability to perform complex reasoning tasks. By understanding their capabilities, limitations and best practices, developers can harness these models to create innovative applications. As OpenAI continues to refine and expand the o1 series, we can expect more exciting developments in AI-driven reasoning. Whether you're an experienced developer or just starting out, the o1 models offer a unique opportunity to explore the future of intelligent systems. Happy coding!

Felo AI Chat always offers free access to advanced AI models from around the world. Click here to try it!
